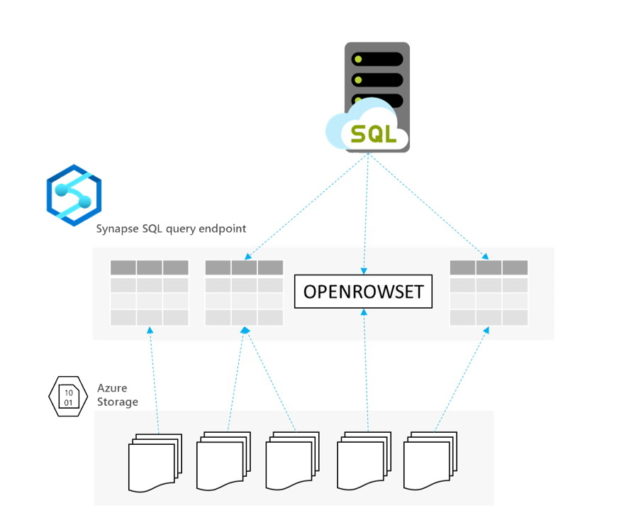

In this blog post I try to demystify how to setup an environment that utilizes Azure Synapse Serverless, Delta Lake on ADLS Gen2, and SQL Managed Instances to enable you to query your delta lake with T-SQL as if it were any other SQL source in order to accomplish something like polybase.

Jovan Popovic has a write up that is about a year old at the time of writing this blog, that does a pretty good job of explaining the thought behind this. We came across it recently when searching for a solution to connecting a few legacy bespoke applications to a data source that wasn’t SQL or accessible via ODBC.

At the inception of the project to move an on-premise data warehouse to the modern data lakehouse architecture, Synapse wasn’t on the table because of 1) the small size of data in the current data warehouse, 2) the rate of growth being too slow, and 3) cost (always), but with the newer offering of Synapse Serverless Pools, it was now worth the exploration. This and there wasn’t any other way we could think of accessing the delta lake data other than replicating it to a SQL database; which would add too much latency to the operational reports that said data supported.

Jovan’s post goes into some of the detail of how you can setup a similar architecture to read file types like parquet and csv, but does not touch on the delta format or how you setup security and authentication or how you can create CETAs or VIEWs of these data. I’ll try to offer the detail we landed on with our solution.

The beauty of this solution is that you do not incur any charges for using Synapse until you read data. Then you are billed $5/TB of data processed. This is perfect for low usage operational reports or analytics that can take a bit more time to process than they would from a SQL table. So how do you set this all up?

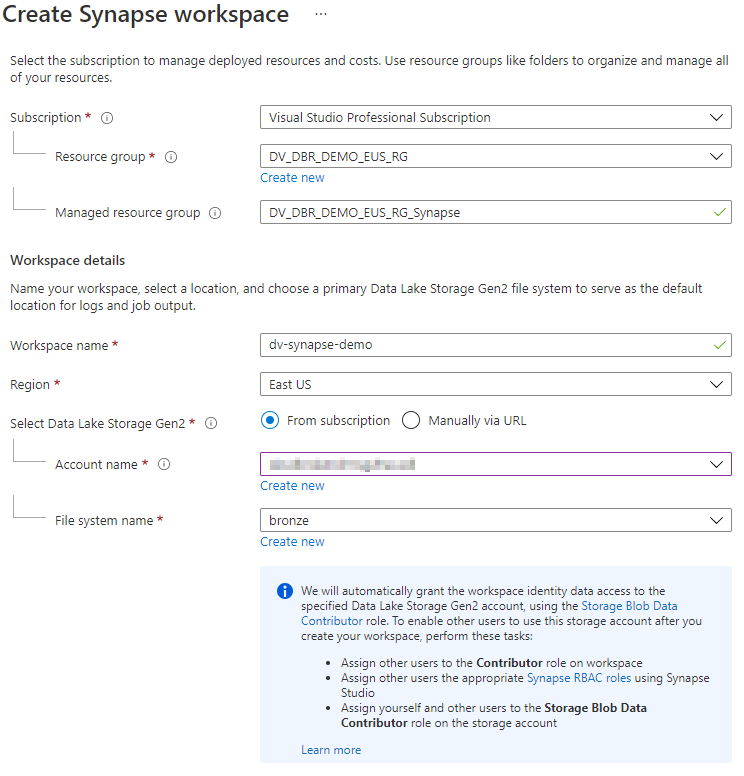

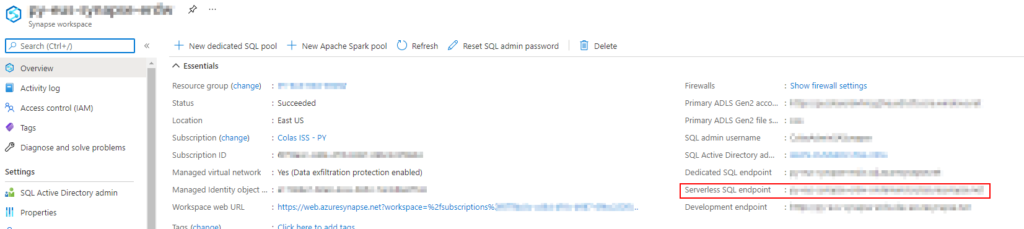

First of all you need to provision a Synapse Workspace if you do not already have one.

The thing to mention here is Azure creates a Managed Identity for Synapse that has access as a Storage Blob Data Contributor to the ADLS Gen2 account you select. This is important later on when we setup the security that is used when making CETAs or VIEWs to the Delta Lake.

Once you have a Synapse Workspace provisioned you can either create a database in the workspace or connect to the Serverless SQL Pool Via SSMS and use a CREATE DATABASE command there. We’ll use “aDatabase” for this example.

You’ll notice on a serverless database you can only create external schemas, tables, and views. In addition to that, you can also create stored procedures and functions via SSMS but not in the workspace.

Once you have your database created, you will need to add a Master Key in order to create credentials and external data sources. Password is optional here.

CREATE MASTER KEY -- ENCRYPTION BY PASSWORD ='<YourPasswordHere>'Once created you will need to a Database Scoped Credential based on the Managed Identity that was created when you provisioned the workspace.

--An SAS key can be used but the Managed Identity is easier to assign to other ADLS if needed

CREATE DATABASE SCOPED CREDENTIAL [dw_adls]

WITH IDENTITY='Managed Identity'

Next you will create an External Data Source to the storage account and container you wish to create external tables or views over.

CREATE EXTERNAL DATA SOURCE dw_adls_silver

WITH

( LOCATION = 'https://<YourStorageAccountName>.blob.core.windows.net/silver/'

,CREDENTIAL = dw_adls

) From here you can create an External File Format for delta if you want to enforce schema.

CREATE EXTERNAL FILE FORMAT DeltaLakeFormat WITH ( FORMAT_TYPE = DELTA );Or you can create a view over a Delta table. Here we have our delta table prefixed with a database name (deltaLake), but are creating a view over it in the dbo schema. You could probably cast types here if you wanted, but we did not try that.

use aDatabase

create view dbo.aTable

as

select *

from openrowset(

bulk 'deltaLake.aTable',

data_source = 'dw_adls_silver',

FORMAT = 'delta'

) as aTableUp until this point these objects are contained within a serverless SQL database, but what if you want to use them with other data as you’d be able to with polybase?

Here’s where things get cool.

Since we are using Synapse Serverless as a vehicle for reading delta lake files and no data actually resides on the database, we will need a way to access its data as well as other data we might want to combine with it. Here is where a SQL Managed Instance comes into play.

You will want to create a Login and database User on the Synapse database you just created that will be used as the security context when setting up a linked server from your managed instance. Once you’ve done that you will need to grant reference access to the database scoped identity you created at the beginning of this process.

GRANT REFERENCES ON DATABASE SCOPED CREDENTIAL::[dw_adls] TO [SQLLinkedSrvAcct]If you don’t do this, you will get an error on the managed instance about not having access to the underlying storage.

Once you’ve created a linked server on your managed instance you will be able to query external delta lake tables via a four part name and even combine the data with other tables or views that reside on the managed instance!

SELECT a.SomeField,

b.SomeOtherField,

FROM ManagedInstanceDB.dbo.SomeTable a

JOIN SynapseLinkedServer.aDatabase.dbo.aTable b

ON a.key = b.keyJust like anything that interacts with parquet files, you will probably want to setup partitioning and use things like predicate pushdown to filter out as many files as you can before the query scans any data. The above example would probably incur more cost than if you added a where clause or a join condition that does some filtering based on partition.