Build Pipeline

In the last three posts we’ve covered the why and how of this approach. We’ve successfully built a notebook that can use different databases to conduct transformations from and either export to a CSV file or write to Delta Lake. Now, we need to incorporate both of these objects into a CI/CD process.

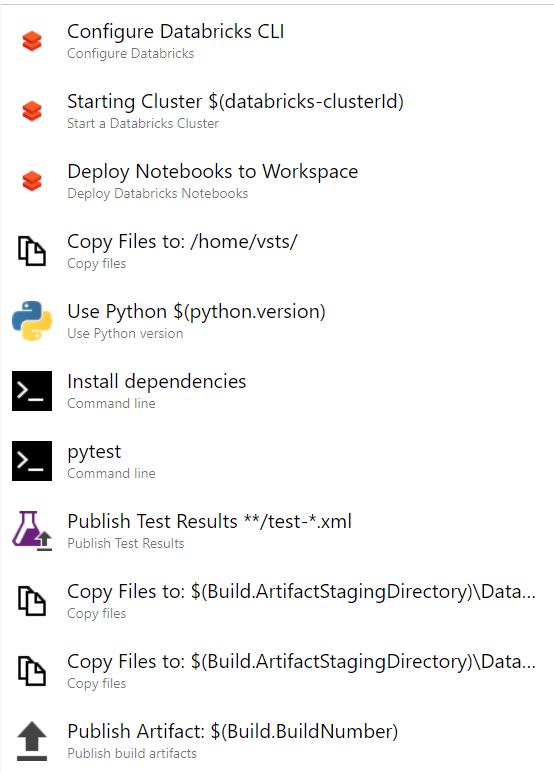

To easier illustrate the process this pipeline was built in the classis editor but a YAML file is available in the GitHub repository for this project.

In the complete architecture this project is a part of, these notebooks are deployed and tested in a CI environment where automated tests are ran. The following pattern is typical to most build pipelines in the complete solution. Code is deployed to the CI environment and tests are ran from the build agent before publishing a build artifact.

Because the Python process is ran with databricks-connect, and interactive/all purpose cluster has to exist in the environment you are testing in. I would recommend either segregating a dev environment into different Databricks workspaces as well as a separate container in ADLS to test with if you do not have an all purpose cluster at your disposal in a separate environment. There may be a way build and deploy this process to dbfs and run on a job cluster, but that is not covered in this post. I’d be welcome to anyone’s suggestion on improving this approach to run on job clusters.

This pipeline is ran on an Ubuntu 16.04 agent. First we use the DevOps for Azure Databricks to do three tasks. We first configure the Databricks CLI, then we ensure our cluster is started, and finally deploy our notebooks.

Once we have our Databricks environment ready to run tests on, we need to configure the build agent with the appropriate requirements to run our tests. In order to do so, we first copy the .databricks-connect file /home/vsts/ in order for our Python code to configure itself to make a connection to our cluster and set the specific Python version based on what cluster you are using.

| Databricks Runtime version | Python version |

|---|---|

| 7.3 LTS ML, 7.3 LTS | 3.7 |

| 7.1 ML, 7.1 | 3.7 |

| 6.4 ML, 6.4 | 3.7 |

| 5.5 LTS ML | 3.6 |

| 5.5 LTS | 3.5 |

The next two tasks are used to upgrade pip and install the libraries in our requirements.txt file in the project:

python -m pip install --upgrade pip && pip install -r "requirements.txt"And then run our unit tests:

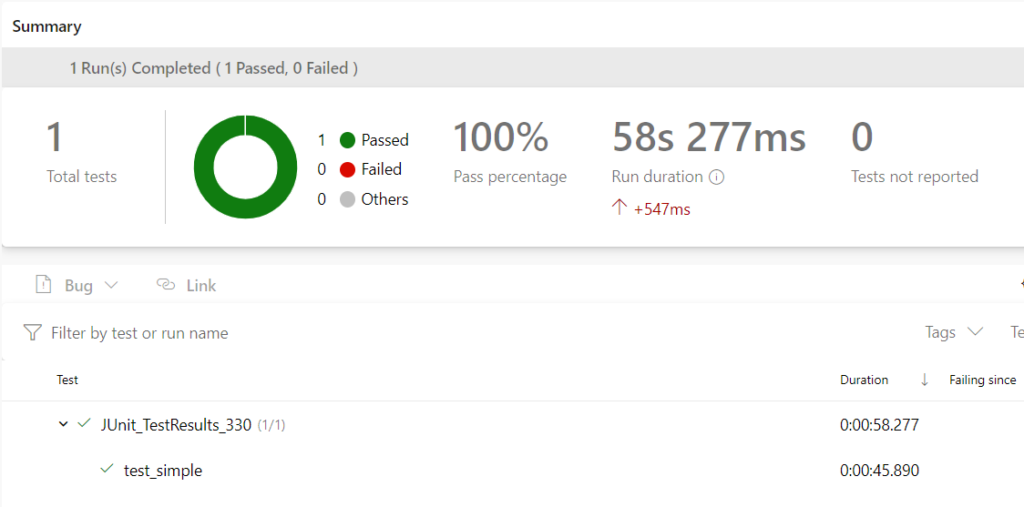

python -m pytest unittests --doctest-modules --junitxml=junit/test-results.xmlPyTest is ran on only the unittests directory and our results are outputted to a newly created junit/test-results.xml directory and file. This file is discovered and published by the next task in the pipeline.

At this point you can set the Publish Unit Tests task to either pass or fail based on the results of the unit test execution. From here we can stage the files we want in our build and publish them.

In our process we only take the Databricks notebooks and a few scripts for deploying jobs. From here we use the build artifact to deploy to our other environments.

And that’s it! Please let me know what you think of the approach in the comments.